This OpenCV tutorial shows how to add a trackbar to a specified window.

Tracking means following the movement of something in order to find it or to know its route.

Bar here refers to the counter.

Thus in OpenCV we add a trackbar to a window so that we can follow the values of a particular function.

createTrackbar: creates a trackbar (slider) and attaches it to the specified window.

Syntax:

C++: int createTrackbar(const string& trackbarname, const string& winname, int* value, int count, TrackbarCallback onChange=0, void* userdata=0)

Parameters:

trackbarname – Name of the created trackbar.

winname – Name of the window that will be used as a parent of the created trackbar.

value – Optional pointer to an integer variable whose value reflects the position of the slider. Upon creation, the slider position is defined by this variable.

count – Maximal position of the slider. The minimal position is always 0.

onChange – Pointer to the function to be called every time the slider changes position. This function should be prototyped as void Foo(int, void*), where the first parameter is the trackbar position and the second parameter is the user data (see the next parameter). If the callback is a NULL pointer, no callback is called and only value is updated.

userdata – User data that is passed as is to the callback. It can be used to handle trackbar events without using global variables.

The function createTrackbar creates a trackbar with the name and range specified by the user, and keeps the variable value synchronized with the slider's position. Whenever the position changes, the onChange function is invoked. The created trackbar is displayed in the specified window winname.

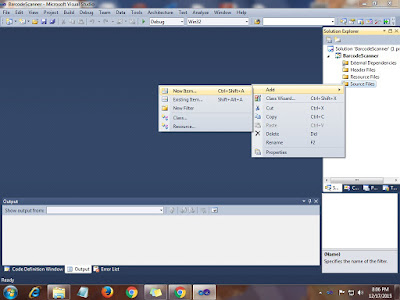

Here is the OpenCV code for adding a trackbar to the specified window to change the intensity values of an image:

//Trackbar for changing Intensity Values of an Image

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

using namespace cv;

/// Global Variables

const int intensity_slider_max = 10;

int slider_value=1;

/// Matrices to store images

Mat src1,dst;

//Callback Function for Trackbar

void on_trackbar( int, void* )

{

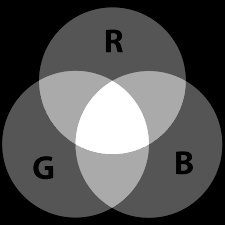

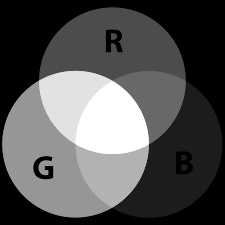

dst=src1/slider_value;

imshow("Intensity Change", dst);

}

int main( int argc, char** argv )

{

/// Read image ( same size, same type )

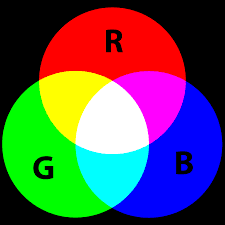

src1 = imread("C:\\Users\\arjun\\Desktop\\opencv-logo.jpg",CV_LOAD_IMAGE_COLOR);

if( !src1.data ) { printf("Error loading src1 \n"); return -1; };

/// Create Windows

namedWindow("Intensity Change", 1);

namedWindow("Original Image",CV_WINDOW_AUTOSIZE);

imshow("Original Image", src1);

///Create Trackbar

createTrackbar( "Intensity", "Intensity Change", &slider_value, intensity_slider_max, on_trackbar );

/// trackbar on_change function

on_trackbar( slider_value, 0 );

/// Wait until user press some key

waitKey(0);

return 0;

}