This OpenCV C++ tutorial is about object detection and recognition using SURF.

For feature extraction and detection using SURF, refer to:

OpenCV C++ Code with Example for Feature Extraction and Detection using SURF Detector

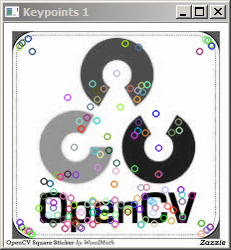

In the previous tutorial we learnt about object recognition and how to detect and extract features from an object using SURF.

In this OpenCV article we match those features of an object against the background image, thus performing object recognition.

Here are a few of the classes and functions used for object recognition using SURF, along with their syntax and descriptions.

DescriptorExtractor:

It is an abstract base class for computing descriptors for image keypoints.

DescriptorExtractor::compute

Computes the descriptors for a set of keypoints detected in an image (first variant) or image set (second variant).

Syntax:

C++: void DescriptorExtractor::compute(const Mat& image, vector<KeyPoint>& keypoints, Mat& descriptors)

C++: void DescriptorExtractor::compute(const vector<Mat>& images, vector<vector<KeyPoint>>& keypoints, vector<Mat>& descriptors)

Parameters:

image – Image.

images – Image set.

keypoints – Input collection of keypoints. Keypoints for which a descriptor cannot be computed are removed and the remaining ones may be reordered. Sometimes new keypoints can be added, for example: SIFT duplicates a keypoint with several dominant orientations (for each orientation).

descriptors – Computed descriptors. In the second variant of the method, descriptors[i] are the descriptors computed for keypoints[i]. Row j of descriptors (or of descriptors[i]) is the descriptor for the j-th keypoint.

DescriptorMatcher

It is an abstract base class for matching keypoint descriptors. It has two groups of match methods: for matching descriptors of an image with another image or with an image set.

DescriptorMatcher::match

It finds the best match for each descriptor from a query set.

C++: void DescriptorMatcher::match(const Mat& queryDescriptors, const Mat& trainDescriptors, vector<DMatch>& matches, const Mat& mask=Mat() )

C++: void DescriptorMatcher::match(const Mat& queryDescriptors, vector<DMatch>& matches, const vector<Mat>& masks=vector<Mat>() )

Parameters:

queryDescriptors – Query set of descriptors.

trainDescriptors – Train set of descriptors. This set is not added to the train descriptors collection stored in the class object.

matches – Matches. If a query descriptor is masked out in mask, no match is added for this descriptor. So, the size of matches may be smaller than the query descriptors count.

mask – Mask specifying permissible matches between an input query and train matrices of descriptors.

masks – Set of masks. Each masks[i] specifies permissible matches between the input query descriptors and stored train descriptors from the i-th image trainDescCollection[i].

Explanation:

In the first variant of this method, the train descriptors are passed as an input argument. In the second variant of the method, train descriptors collection that was set by DescriptorMatcher::add is used. Optional mask (or masks) can be passed to specify which query and training descriptors can be matched. Namely, queryDescriptors[i] can be matched with trainDescriptors[j] only if mask.at<uchar>(i,j) is non-zero.
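To make the matching rule concrete, here is a minimal brute-force sketch in plain C++ with no OpenCV dependency. It assumes descriptors are stored as `std::vector<float>` and uses a hypothetical `SimpleMatch` struct in place of `cv::DMatch`; both names are illustrative only. The `FlannBasedMatcher` used later in this article performs an approximate nearest-neighbour search, so this shows the result being sought rather than how the library computes it.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices into the query and train sets
// plus the distance between the two descriptors.
struct SimpleMatch {
    int queryIdx;
    int trainIdx;
    float distance;
};

using Descriptor = std::vector<float>;

// Squared Euclidean distance between two descriptors of equal length.
float squaredL2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        sum += d * d;
    }
    return sum;
}

// For each query descriptor, find the nearest permitted train descriptor.
// mask[i][j] != 0 means query i may be matched with train j; an empty mask
// permits every pair. A query with no permitted candidate yields no match,
// so the result can be shorter than the query set.
std::vector<SimpleMatch> bruteForceMatch(
    const std::vector<Descriptor>& query,
    const std::vector<Descriptor>& train,
    const std::vector<std::vector<unsigned char>>& mask = {}) {
    std::vector<SimpleMatch> matches;
    for (std::size_t i = 0; i < query.size(); ++i) {
        int best = -1;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t j = 0; j < train.size(); ++j) {
            if (!mask.empty() && mask[i][j] == 0) continue;  // pair not permitted
            const float dist = squaredL2(query[i], train[j]);
            if (dist < bestDist) {
                bestDist = dist;
                best = static_cast<int>(j);
            }
        }
        if (best >= 0) {
            matches.push_back({static_cast<int>(i), best, std::sqrt(bestDist)});
        }
    }
    return matches;
}
```

Calling `bruteForceMatch(query, train)` returns at most one match per query descriptor. Passing a mask whose row i is all zeros drops query i from the result, which mirrors the note on the matches parameter above.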

drawMatches

Draws the found matches of keypoints from two images.

Syntax:

C++: void drawMatches(const Mat& img1, const vector<KeyPoint>& keypoints1, const Mat& img2, const vector<KeyPoint>& keypoints2, const vector<DMatch>& matches1to2, Mat& outImg, const Scalar& matchColor=Scalar::all(-1), const Scalar& singlePointColor=Scalar::all(-1), const vector<char>& matchesMask=vector<char>(), int flags=DrawMatchesFlags::DEFAULT )

C++: void drawMatches(const Mat& img1, const vector<KeyPoint>& keypoints1, const Mat& img2, const vector<KeyPoint>& keypoints2, const vector<vector<DMatch>>& matches1to2, Mat& outImg, const Scalar& matchColor=Scalar::all(-1), const Scalar& singlePointColor=Scalar::all(-1), const vector<vector<char>>& matchesMask=vector<vector<char> >(), int flags=DrawMatchesFlags::DEFAULT )

Parameters:

img1 – First source image.

keypoints1 – Keypoints from the first source image.

img2 – Second source image.

keypoints2 – Keypoints from the second source image.

matches1to2 – Matches from the first image to the second one: for each match m, keypoints1[m.queryIdx] has a corresponding point in keypoints2[m.trainIdx].

outImg – Output image. Its content depends on the flags value defining what is drawn in the output image. See possible flags bit values below.

matchColor – Color of matches (lines and connected keypoints). If matchColor==Scalar::all(-1), the color is generated randomly.

singlePointColor – Color of single keypoints (circles), that is, keypoints that have no match. If singlePointColor==Scalar::all(-1), the color is generated randomly.

matchesMask – Mask determining which matches are drawn. If the mask is empty, all matches are drawn.

flags – Flags setting drawing features. Possible flags bit values are defined by DrawMatchesFlags.

Explanation:

This function draws the matches of keypoints from two images in the output image. A match is drawn as a line connecting two keypoints (circles).
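The output image holds the first image on the left and the second image on the right, so a keypoint from the second image is drawn shifted right by the width of the first image. The sketch below computes the endpoints of each match line under that layout. It is plain C++ for illustration only; `Pt`, `IndexPair`, `Segment` and `matchSegments` are hypothetical names, not part of OpenCV.

```cpp
#include <cstddef>
#include <vector>

struct Pt { float x; float y; };

// Hypothetical stand-in for the index fields of cv::DMatch.
struct IndexPair { int queryIdx; int trainIdx; };

// One match line on the side-by-side canvas.
struct Segment { Pt from; Pt to; };

// Endpoints of each match line on a canvas where the first image sits on the
// left and the second image is shifted right by the first image's width.
std::vector<Segment> matchSegments(const std::vector<Pt>& keypoints1,
                                   const std::vector<Pt>& keypoints2,
                                   const std::vector<IndexPair>& matches,
                                   int img1Cols) {
    std::vector<Segment> segments;
    segments.reserve(matches.size());
    for (const IndexPair& m : matches) {
        const Pt& a = keypoints1[static_cast<std::size_t>(m.queryIdx)];
        const Pt& b = keypoints2[static_cast<std::size_t>(m.trainIdx)];
        const Pt shifted = {b.x + static_cast<float>(img1Cols), b.y};
        segments.push_back({a, shifted});
    }
    return segments;
}
```

The same horizontal shift is needed whenever something located in the second image is drawn on the combined output image.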

```cpp
// OpenCV C++ Tutorial: Object Detection Using SURF detector
// Written against the OpenCV 2.4 API, where SURF lives in the nonfree module.
#include <iostream>
#include "opencv2/core/core.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/features2d/features2d.hpp"  // FlannBasedMatcher, drawMatches
#include "opencv2/nonfree/nonfree.hpp"        // SurfFeatureDetector, SurfDescriptorExtractor

using namespace cv;
using namespace std;

int main()
{
    // Load both images as grayscale
    Mat image_obj = imread( "C:\\Users\\arjun\\Desktop\\image_object.png", CV_LOAD_IMAGE_GRAYSCALE );
    Mat image_scene = imread( "C:\\Users\\arjun\\Desktop\\background_scene.png", CV_LOAD_IMAGE_GRAYSCALE );

    // Check whether the images have been loaded
    if( !image_obj.data )
    {
        cout << " --(!) Error reading image1 " << endl;
        return -1;
    }
    if( !image_scene.data )
    {
        cout << " --(!) Error reading image2 " << endl;
        return -1;
    }

    //-- Step 1: Detect the keypoints using SURF Detector
    int minHessian = 400;
    SurfFeatureDetector detector( minHessian );
    vector<KeyPoint> keypoints_obj, keypoints_scene;
    detector.detect( image_obj, keypoints_obj );
    detector.detect( image_scene, keypoints_scene );

    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    Mat descriptors_obj, descriptors_scene;
    extractor.compute( image_obj, keypoints_obj, descriptors_obj );
    extractor.compute( image_scene, keypoints_scene, descriptors_scene );

    //-- Step 3: Match descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match( descriptors_obj, descriptors_scene, matches );

    // Draw only the matched keypoints; unmatched single points are skipped
    Mat img_matches;
    drawMatches( image_obj, keypoints_obj, image_scene, keypoints_scene,
                 matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                 vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

    //-- Step 4: Show the drawn matches
    imshow( "DetectedImage", img_matches );
    waitKey(0);
    return 0;
}
```
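Step 3 returns the nearest scene descriptor for every object descriptor, so weak matches are drawn along with the strong ones. A common refinement, which the program above does not use, is to keep only the matches whose distance lies within a small multiple of the smallest distance found. Here is a minimal sketch in plain C++, assuming a hypothetical `ScoredMatch` struct in place of `cv::DMatch`; the default factor of 3 is an illustrative choice, not a recommended value.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for cv::DMatch.
struct ScoredMatch {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the matches whose distance is at most `factor` times the smallest
// distance in the set. The factor is a tunable heuristic (assumption).
std::vector<ScoredMatch> keepCloseMatches(const std::vector<ScoredMatch>& matches,
                                          float factor = 3.0f) {
    std::vector<ScoredMatch> kept;
    if (matches.empty()) return kept;

    // Find the smallest descriptor distance among all matches.
    float minDist = matches.front().distance;
    for (const ScoredMatch& m : matches) {
        minDist = std::min(minDist, m.distance);
    }

    // Retain only the matches within the threshold.
    const float threshold = factor * minDist;
    for (const ScoredMatch& m : matches) {
        if (m.distance <= threshold) kept.push_back(m);
    }
    return kept;
}
```

One caveat of this heuristic: if the best match has a distance of exactly zero, the threshold collapses to zero as well, so a small lower bound on the threshold may be needed in practice.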

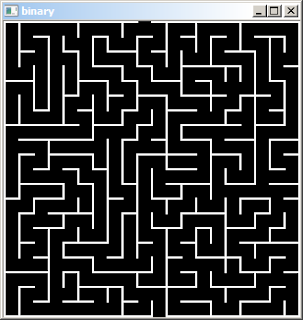

Input Image:

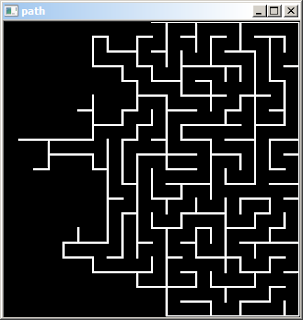

Background Image:

Output Image:

We have now matched the keypoints of the object to be detected with the corresponding keypoints in the background image.

The next step is to mark the object in the scene with a rectangle, so that it is easy to identify the object in the background image.

Here is the OpenCV C++ code for it:

```cpp
// OpenCV C++ Tutorial: Object Detection Using SURF detector
// Written against the OpenCV 2.4 API, where SURF lives in the nonfree module.
#include <iostream>
#include "opencv2/core/core.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/features2d/features2d.hpp"  // FlannBasedMatcher, drawMatches
#include "opencv2/nonfree/nonfree.hpp"        // SurfFeatureDetector, SurfDescriptorExtractor
#include "opencv2/calib3d/calib3d.hpp"        // findHomography

using namespace cv;
using namespace std;

int main()
{
    // Load both images as grayscale
    Mat image_obj = imread( "C:\\Users\\arjun\\Desktop\\image_object.png", CV_LOAD_IMAGE_GRAYSCALE );
    Mat image_scene = imread( "C:\\Users\\arjun\\Desktop\\background_scene.png", CV_LOAD_IMAGE_GRAYSCALE );

    // Check whether the images have been loaded
    if( !image_obj.data )
    {
        cout << " --(!) Error reading image1 " << endl;
        return -1;
    }
    if( !image_scene.data )
    {
        cout << " --(!) Error reading image2 " << endl;
        return -1;
    }

    //-- Step 1: Detect the keypoints using SURF Detector
    int minHessian = 400;
    SurfFeatureDetector detector( minHessian );
    vector<KeyPoint> keypoints_obj, keypoints_scene;
    detector.detect( image_obj, keypoints_obj );
    detector.detect( image_scene, keypoints_scene );

    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    Mat descriptors_obj, descriptors_scene;
    extractor.compute( image_obj, keypoints_obj, descriptors_obj );
    extractor.compute( image_scene, keypoints_scene, descriptors_scene );

    //-- Step 3: Match descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    vector<DMatch> matches;
    matcher.match( descriptors_obj, descriptors_scene, matches );

    Mat img_matches;
    drawMatches( image_obj, keypoints_obj, image_scene, keypoints_scene,
                 matches, img_matches, Scalar::all(-1), Scalar::all(-1),
                 vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

    //-- Step 4: Localize the object
    vector<Point2f> obj;
    vector<Point2f> scene;
    for( size_t i = 0; i < matches.size(); i++ )
    {
        //-- Step 5: Get the keypoints from the matches
        obj.push_back( keypoints_obj[ matches[i].queryIdx ].pt );
        scene.push_back( keypoints_scene[ matches[i].trainIdx ].pt );
    }

    // findHomography needs at least four point pairs
    if( obj.size() < 4 )
    {
        cout << " --(!) Not enough matches to locate the object " << endl;
        return -1;
    }

    //-- Step 6: Find the homography (object plane to scene plane) with RANSAC
    Mat H = findHomography( obj, scene, CV_RANSAC );

    //-- Step 7: Get the corners of the object which needs to be detected
    vector<Point2f> obj_corners(4);
    obj_corners[0] = Point2f( 0.0f, 0.0f );
    obj_corners[1] = Point2f( (float)image_obj.cols, 0.0f );
    obj_corners[2] = Point2f( (float)image_obj.cols, (float)image_obj.rows );
    obj_corners[3] = Point2f( 0.0f, (float)image_obj.rows );

    //-- Step 8: Storage for the corners of the object in the scene (background image)
    vector<Point2f> scene_corners(4);

    //-- Step 9: Map the object corners into the scene with the homography
    perspectiveTransform( obj_corners, scene_corners, H );

    //-- Step 10: Draw lines between the mapped corners.
    // img_matches holds the object image on the left, so scene coordinates
    // are shifted right by image_obj.cols.
    Point2f offset( (float)image_obj.cols, 0.0f );
    line( img_matches, scene_corners[0] + offset, scene_corners[1] + offset, Scalar( 0, 255, 0 ), 4 );
    line( img_matches, scene_corners[1] + offset, scene_corners[2] + offset, Scalar( 0, 255, 0 ), 4 );
    line( img_matches, scene_corners[2] + offset, scene_corners[3] + offset, Scalar( 0, 255, 0 ), 4 );
    line( img_matches, scene_corners[3] + offset, scene_corners[0] + offset, Scalar( 0, 255, 0 ), 4 );

    //-- Step 11: Show the object marked in the background image
    imshow( "DetectedImage", img_matches );
    waitKey(0);
    return 0;
}
```

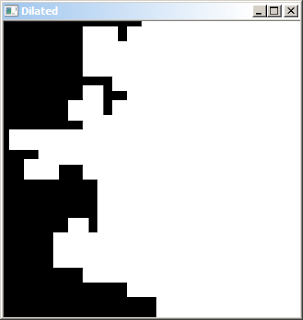

Input Object Image:

Background Image:

Output Image: