A digital negative, as the name suggests, is obtained by inverting the pixel values of an image so that bright pixels appear dark and dark pixels appear bright.

Thus the darkest pixel in the original image becomes the brightest pixel in its negative.

A good example is an X-ray image.

Now consider an 8-bit image, where pixel values range from 0 to 255.

To obtain the negative, we subtract each pixel value from 255, i.e. s = 255 - r, where r is the original pixel value and s is the corresponding value in the negative.

In general, for a k-bit image, pixel values range from 0 to (2^k)-1.

So each pixel value has to be subtracted from (2^k)-1, i.e. s = ((2^k)-1) - r.
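The general rule can be sketched as a small C++ function. This is only an illustration of the formula s = ((2^k)-1) - r; the name `negativePixel` and its parameters are assumptions made for this example and are not part of OpenCV.

```cpp
#include <cassert>
#include <cstdint>

// Negative of one pixel in a k-bit image: s = (2^k - 1) - r.
// `negativePixel`, `value` and `bits` are illustrative names, not OpenCV API.
std::uint32_t negativePixel(std::uint32_t value, unsigned bits)
{
    assert(bits >= 1 && bits <= 16);                    // assumed range of bit depths
    const std::uint32_t maxValue = (1u << bits) - 1u;   // 255 when bits == 8
    assert(value <= maxValue);                          // value must fit in k bits
    return maxValue - value;
}
```

For an 8-bit image, `negativePixel(0, 8)` gives 255 and `negativePixel(255, 8)` gives 0, so the darkest and brightest values swap places.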

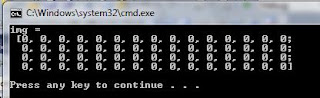


The following OpenCV code computes the digital negative of an 8-bit grayscale image:

```cpp
// OpenCV Digital Negative Tutorial
#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <iostream>

using namespace cv;
using namespace std;

int main()
{
    // Load the input as a single-channel 8-bit grayscale image.
    Mat src1 = imread("C:\\Users\\arjun\\Desktop\\image_opencv.jpg", IMREAD_GRAYSCALE);

    // Check that the image loaded before using its dimensions.
    if (src1.empty()) { cerr << "Error loading src1" << endl; return -1; }

    // Output image with the same size as the input, 8-bit single channel.
    Mat src2 = Mat::zeros(src1.rows, src1.cols, CV_8UC1);

    // Negative of each 8-bit pixel: 255 minus the original value.
    for (int row = 0; row < src1.rows; row++)
    {
        for (int col = 0; col < src1.cols; col++)
        {
            src2.at<uchar>(row, col) = static_cast<uchar>(255 - src1.at<uchar>(row, col));
        }
    }

    namedWindow("Digital Negative Image", WINDOW_AUTOSIZE);
    imshow("Digital Negative Image", src2);

    // Uncomment to save the negative to disk.
    //imwrite("C:\\Users\\arjun\\Desktop\\digitalnegative.jpg", src2);

    namedWindow("Original Image", WINDOW_AUTOSIZE);
    imshow("Original Image", src1);

    waitKey(0);
    return 0;
}
```
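To see the per-pixel rule without any OpenCV dependency, here is a minimal sketch that applies it to a plain buffer of 8-bit values. The helper name `negateBuffer` is hypothetical and the flat row-by-row layout is an assumption of this example. It also checks that, for 8-bit unsigned values, 255 - v is the same as the bitwise complement of v, which is why OpenCV's `bitwise_not` should give the same result on an 8-bit image.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Negate an 8-bit grayscale image stored row by row in a flat buffer.
// `negateBuffer` is a hypothetical helper, not an OpenCV function.
std::vector<std::uint8_t> negateBuffer(const std::vector<std::uint8_t>& pixels)
{
    std::vector<std::uint8_t> out(pixels.size());
    for (std::size_t i = 0; i < pixels.size(); ++i)
    {
        const std::uint8_t negative = static_cast<std::uint8_t>(255 - pixels[i]);
        // For 8-bit unsigned values, 255 - v equals the bitwise complement of v.
        assert(negative == static_cast<std::uint8_t>(~pixels[i]));
        out[i] = negative;
    }
    return out;
}
```

Applying `negateBuffer` twice returns the original buffer, since 255 - (255 - v) = v.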

Input Image:

Output Image:

Applications:

Digital negatives are widely used in medical imaging, where they help reveal minute details of tissue.

They are also used in astronomy for observing distant stars.

Input Image:

Output: